Biography

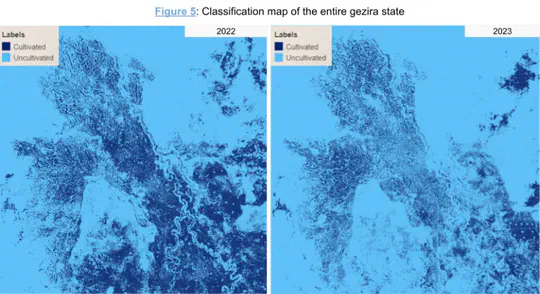

I’m an AI and machine learning engineer passionate about driving real-world impact through responsible innovation. NLP and efficient large language models are my specialties, and I love using my work to democratize software creation. I’m currently pursuing an MSc in AI, researching efficient fine-tuning of transformers for code generation. In my roles so far, I’ve applied ML to challenges in agriculture, NLP, and software engineering. Outside of work, I’m fascinated by the societal implications of AI and actively explore issues such as algorithmic bias and responsible ML. I love connecting with fellow researchers pushing the boundaries of AI for good. When I’m not coding, you can find me at the cinema or on the football pitch. I’m always eager to take on new challenges and collaborate with thoughtful teams to advance the equitable use of AI.

- Multi-Modal Machine Learning

- Multilingual NLP

- Responsible AI

- Geospatial Analysis

MSc in Artificial Intelligence, 2022

University of Edinburgh

BSc in Electrical and Electronic Engineering - Software Engineering, 2014

University of Khartoum

Skills

Experience

Projects

Featured Publications

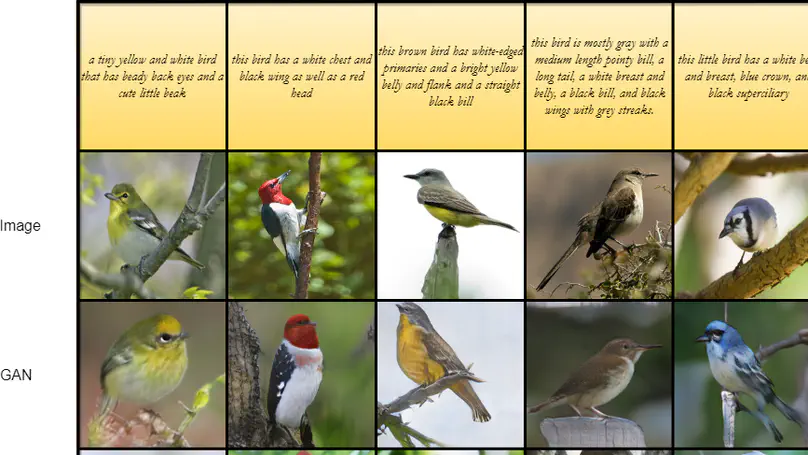

We run experiments with both models on the Caltech birds dataset to validate the effectiveness of our proposal. Compared with the original AttnGAN, our model improves the Inception Score by 4.13% and the Fréchet Inception Distance (FID) by 13.93%.
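The two evaluation metrics cited above can be made concrete with a short NumPy sketch. This is not the paper's evaluation code: it assumes you already have class probabilities and feature vectors from a pretrained Inception network (commonly Inception-v3), which are not computed here, and the names `inception_score` and `frechet_distance` are our own. A higher Inception Score and a lower FID both indicate better samples, so an FID improvement means the distance went down.

```python
import numpy as np

# Assumption: inputs are precomputed Inception outputs; no network is loaded here.


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS = exp(E_x[KL(p(y|x) || p(y))]) for an (N, C) array of class probabilities."""
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    # Per-sample KL divergence; eps keeps log() finite where a probability is 0.
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))


def _sqrtm_psd(mat: np.ndarray) -> np.ndarray:
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """FID = ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^(1/2)) for (N, D) features."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((S_a S_b)^(1/2)) equals the trace of the square root of the symmetric
    # PSD matrix S_a^(1/2) S_b S_a^(1/2), which avoids a non-symmetric sqrtm.
    root_a = _sqrtm_psd(cov_a)
    middle = root_a @ cov_b @ root_a
    tr_covmean = np.sum(np.sqrt(np.clip(np.linalg.eigvalsh(middle), 0.0, None)))
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_covmean)
```

Computing the matrix square root through a symmetric eigendecomposition keeps the sketch dependent on NumPy only; many implementations call `scipy.linalg.sqrtm` on `S_a @ S_b` instead.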

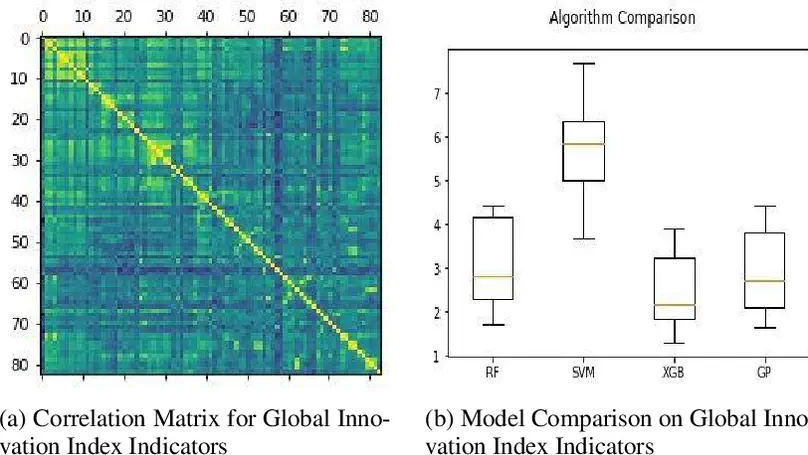

In this paper, we discuss the concept and emergence of innovation performance and how to quantify it, working primarily with data from the Global Innovation Index (GII) with an emphasis on innovation performance in Africa. We briefly review existing literature on using machine learning to model innovation performance, and we use simple machine learning techniques to analyze and predict the “Mobile App Creation Indicator” from the GII, drawing on insights from the Stack Overflow Developer Survey. We also build and compare models to predict the GII’s Innovation Output Sub-index.
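The paper describes its models only as “simple machine learning techniques”, so the following is a hypothetical sketch rather than the authors’ pipeline. It assumes one row of survey-derived features per country (for example, aggregated answers from the Stack Overflow Developer Survey) and a GII indicator score as the target, fits ordinary least squares with NumPy, and compares it with a predict-the-mean baseline using leave-one-out error, which suits small country-level datasets. All function names are illustrative.

```python
import numpy as np

# Assumption: x is an (n_countries, n_features) array of survey-derived features
# and y holds the matching GII indicator scores; both are hypothetical inputs.


def fit_linear(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least squares with an intercept; returns [intercept, coef_1, ..., coef_d]."""
    design = np.column_stack([np.ones(len(x)), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def predict_linear(coef: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Apply the fitted intercept and coefficients to a 2-D feature array."""
    return coef[0] + x @ coef[1:]


def leave_one_out_mse(x: np.ndarray, y: np.ndarray, model: str = "linear") -> float:
    """Leave-one-out mean squared error for the linear model or a mean-only baseline."""
    errors = []
    for i in range(len(y)):
        train = np.arange(len(y)) != i  # boolean mask that drops sample i
        if model == "linear":
            coef = fit_linear(x[train], y[train])
            pred = predict_linear(coef, x[i:i + 1])[0]
        else:  # "mean" baseline: predict the training-set average
            pred = y[train].mean()
        errors.append((y[i] - pred) ** 2)
    return float(np.mean(errors))
```

A model is only worth reporting if its leave-one-out error clearly beats the mean baseline; the same comparison loop can be reused for any other regressor.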

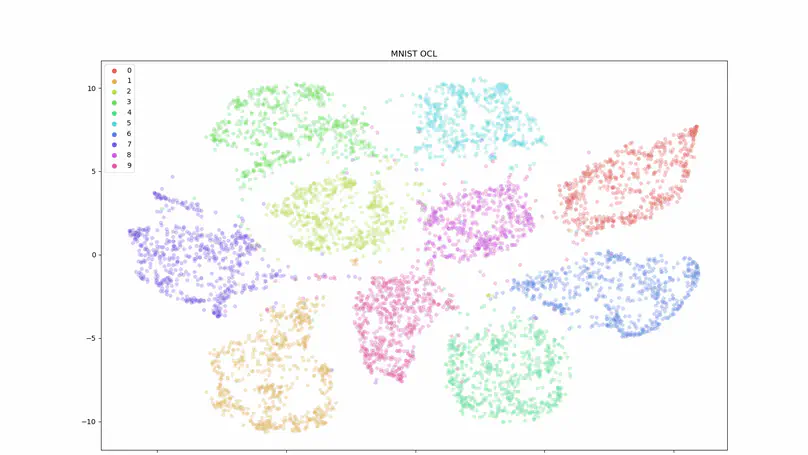

We benefit from the orthogonal properties of Kasami codes and from the observation that the latent representation the network generates for a data point belonging to more than one class can be approximated as the sum of the individual latent representations learned during training for each class present in that point. This lets us train neural networks only on single-label images and then test them on multi-label images without any additional multi-label training.
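The additive-latent idea above can be illustrated without a network. This sketch is not the paper’s implementation: it assumes each class is assigned one ±1 Kasami code as its target latent vector, and it builds a small Kasami set of length 63 from the primitive polynomial x^6 + x + 1. The polynomial, code length, 0.5 decoding threshold, and function names are all our assumptions. Small Kasami sets are near-orthogonal rather than strictly orthogonal: distinct codes have a low (bounded, non-zero) cross-correlation, which is what lets a summed latent be decoded back into its classes.

```python
import numpy as np

# Assumption: n = 6, so the small Kasami set holds 2^(n/2) = 8 codes of length 63.


def m_sequence_deg6() -> np.ndarray:
    """Length-63 m-sequence from x^6 + x + 1, i.e. a[k+6] = a[k+1] XOR a[k]."""
    a = [1, 0, 0, 0, 0, 0]  # any nonzero initial state with a[0] = 1
    while len(a) < 63:
        k = len(a) - 6
        a.append(a[k + 1] ^ a[k])
    return np.array(a, dtype=np.int8)


def small_kasami_set() -> np.ndarray:
    """Return an (8, 63) array of binary small-Kasami sequences."""
    a = m_sequence_deg6()
    length = len(a)  # 63
    # Decimating by 2^(n/2) + 1 = 9 gives an m-sequence of period 2^(n/2) - 1 = 7.
    b = a[(9 * np.arange(length)) % length]
    seqs = [a] + [a ^ np.roll(b, -shift) for shift in range(7)]
    return np.array(seqs)


def to_bipolar(bits: np.ndarray) -> np.ndarray:
    """Map bits {0, 1} to {+1, -1} so correlations become dot products."""
    return 1 - 2 * bits.astype(np.int64)


def decode_labels(latent: np.ndarray, codes: np.ndarray, threshold: float = 0.5) -> list[int]:
    """Return the classes whose normalized correlation with the latent exceeds threshold."""
    scores = codes @ latent / codes.shape[1]
    return [int(c) for c in np.flatnonzero(scores > threshold)]
```

For example, with `codes = to_bipolar(small_kasami_set())`, the simulated multi-label latent `codes[1] + codes[4]` decodes back to classes 1 and 4: each present class scores at least (63 - 9) / 63, while absent classes stay well below the threshold.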

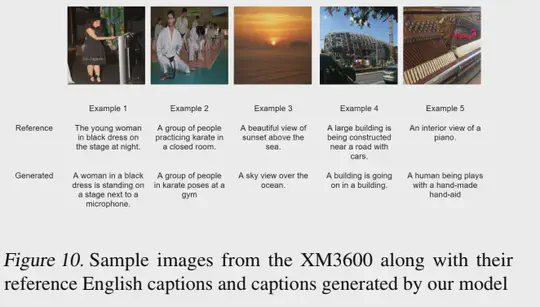

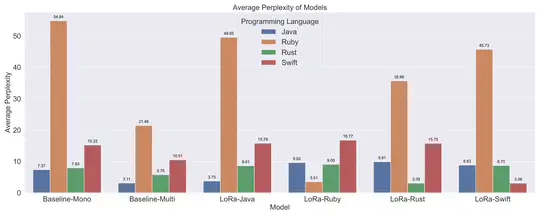

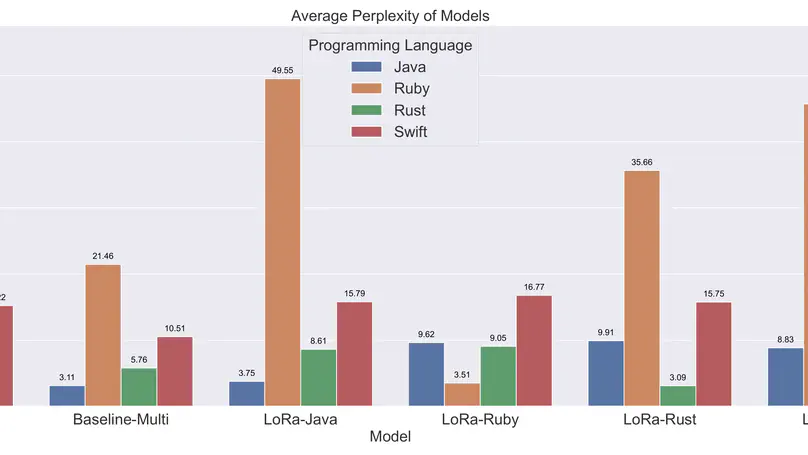

The democratization of AI and access to code language models have become pivotal goals in the field of artificial intelligence. Large Language Models (LLMs) have shown exceptional capabilities in code intelligence tasks, but their accessibility remains a challenge due to computational costs and training complexity. This paper addresses these challenges by presenting a comprehensive approach to scaling down code intelligence LLMs.

Contact

Send me a message and I’ll get back to you as soon as possible.